How to implement a multi-tenant platform with .NET Aspire and Azure Container Apps?

A while ago, a small team of us started building a set of services for a specific business domain. The foundation of the solution was .NET Aspire and an Azure Container Apps environment. We soon saw the potential of this technology combination, which raised the question of how to scale the platform to support multiple domain services. Later on, several teams would probably start developing their own services on the platform.

How should the platform architecture be designed so that it supports multiple independent teams and domain services? We really liked how the combination of .NET Aspire and the Azure Developer CLI boosted the development experience and simplified deploying containerized applications to an Azure Container Apps environment, so we wanted to use this technology combination as the base of the platform.

This blog post presents how to create a multi-tenant platform using .NET Aspire and Azure Container Apps Environment.

Platform design guidelines

We set the following design criteria for the platform:

- Enhanced developer experience and productivity.

- Less time spent on infrastructure and deployment pipelines, more time spent developing the application and solving business problems. Faster time to market.

- Shared deployment pipeline templates and Azure infrastructure modules.

- Simplified, partially shared, and cost-efficient Azure infrastructure for application hosting.

- Each microservice can choose between the Dedicated and Consumption workload profiles.

- Clear domain boundaries and ownership for each domain service.

- Each domain service has its own repository for source control, deployment pipelines (using shared templates), and Azure resources (Resource Group).

- Isolated data storage.

Multitenancy models in Azure Container Apps

Microsoft has published a good article about considerations for using Azure Container Apps in a multitenant solution. The article presents the following multitenancy models, which determine the required level of isolation:

- Trusted multitenancy by using a shared environment. This model might be appropriate when your tenants are all from within your organization.

- Hostile multitenancy by deploying separate environments for each tenant. This model might be appropriate when you don't trust the code that your tenants run.

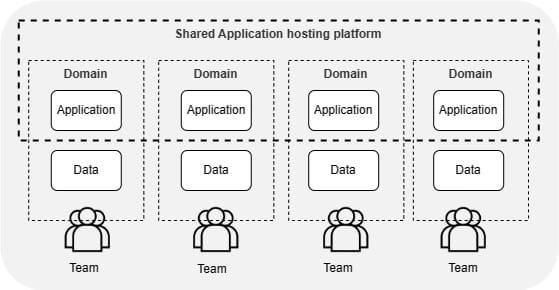

Trusted multitenancy

Optimizing cost, networking resources, and operations for trusted multitenant applications.

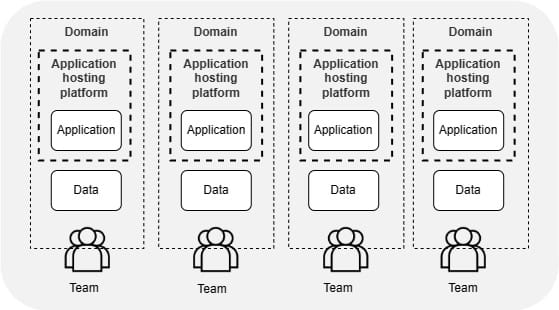

Hostile multitenancy

Running hostile multitenant workloads in isolated environments for security and compliance.

In our case, the multi-tenant platform shares the application hosting platform and its infrastructure among several applications developed by multiple teams (trusted multitenancy). Each application has its own customers and isolated data storage.

Infrastructure of the platform

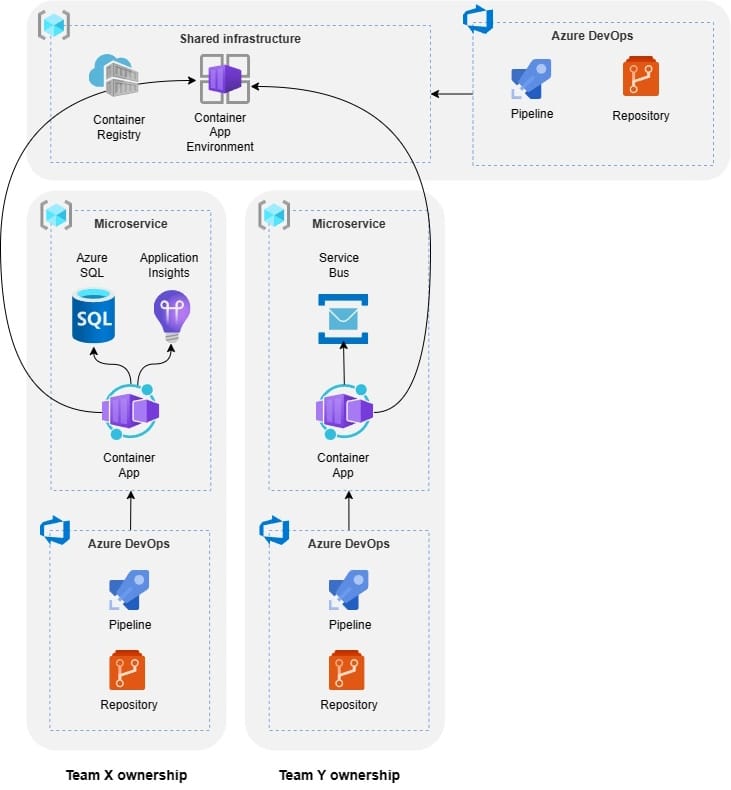

As mentioned, the platform is built on the trusted multitenancy model, in which application hosting capabilities are shared.

Shared infrastructure

- Its own resource group, which contains the Azure Container Registry and Azure Container Apps environment resources.

- The Azure Container Apps environment has predefined workload profiles for the platform's microservices. Both Dedicated and Consumption profiles are available.

- Its own source control repository and deployment pipelines.

- The source control repository contains the IaC code that creates the shared infrastructure, the shared YAML templates, and a pipeline that publishes the shared Bicep modules to Azure Container Registry.
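The shared resource group described above could be created with a Bicep file along these lines. This is a minimal sketch, assuming the shared Log Analytics workspace already exists in the same resource group; the parameter names, the `Standard` registry SKU, and the `D4` profile size with its node counts are illustrative assumptions, not the platform's actual values.

```bicep
// Sketch of the shared infrastructure, deployed into the shared resource group.
// All names, SKUs, and sizes below are illustrative assumptions.
param location string = resourceGroup().location

@description('Globally unique, alphanumeric name for the shared registry.')
param registryName string

@description('Name of the shared Container Apps environment.')
param containerAppEnvironmentName string

@description('Existing Log Analytics workspace for environment system and console logs.')
param logAnalyticsWorkspaceName string

resource logAnalytics 'Microsoft.OperationalInsights/workspaces@2022-10-01' existing = {
  name: logAnalyticsWorkspaceName
}

// Shared registry for container images and the published Bicep modules.
resource registry 'Microsoft.ContainerRegistry/registries@2023-07-01' = {
  name: registryName
  location: location
  sku: {
    name: 'Standard'
  }
  properties: {
    adminUserEnabled: false
  }
}

// Shared environment offering both a Consumption and a Dedicated workload profile.
resource containerAppEnvironment 'Microsoft.App/managedEnvironments@2023-05-01' = {
  name: containerAppEnvironmentName
  location: location
  properties: {
    appLogsConfiguration: {
      destination: 'log-analytics'
      logAnalyticsConfiguration: {
        customerId: logAnalytics.properties.customerId
        sharedKey: logAnalytics.listKeys().primarySharedKey
      }
    }
    workloadProfiles: [
      {
        name: 'Consumption'
        workloadProfileType: 'Consumption'
      }
      {
        name: 'Dedicated'
        workloadProfileType: 'D4'
        minimumCount: 1
        maximumCount: 3
      }
    ]
  }
}

output registryLoginServer string = registry.properties.loginServer
output containerAppEnvironmentId string = containerAppEnvironment.id
```

The profile names (`Consumption`, `Dedicated`) are what each Container App's `workloadProfileName` must match, so they effectively become part of the platform's contract with the teams.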

The infrastructure of domain-specific microservices

- Each microservice has its own resource group that contains all of its domain-specific Azure resources. This keeps resources such as data storage completely isolated.

- Azure access rights are handled at the resource group level. Teams have access only to their own resource group.

- Its own source control repository and deployment pipelines.

- As mentioned, applications are hosted in the shared Azure Container Apps environment, but each domain-specific Container App resource is located in the microservice's own resource group.

- Each microservice independently decides its container-specific settings, such as replicas, ingress, scaling rules, and resource capacity, in its manifest YAML.
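Granting a team access to its own resource group could be handled by one more shared module. This is a minimal sketch, assuming each team is represented by a Microsoft Entra ID group whose object ID is passed in as a parameter; the module itself and the choice of the built-in Contributor role are assumptions. The identity that runs the deployment also needs permission to create role assignments (for example, Owner or User Access Administrator).

```bicep
// Hypothetical shared module, deployed into a microservice's resource group.
targetScope = 'resourceGroup'

@description('Object ID of the Microsoft Entra ID group that represents the team.')
param teamGroupObjectId string

@description('Built-in role definition GUID; defaults to Contributor.')
param roleDefinitionGuid string = 'b24988ac-6180-42a0-ab88-20f7382dd24c'

// No explicit scope: the assignment applies to the whole resource group,
// so the team can manage its own domain resources and nothing else.
resource teamAccess 'Microsoft.Authorization/roleAssignments@2022-04-01' = {
  name: guid(resourceGroup().id, teamGroupObjectId, roleDefinitionGuid)
  properties: {
    principalId: teamGroupObjectId
    principalType: 'Group'
    roleDefinitionId: subscriptionResourceId('Microsoft.Authorization/roleDefinitions', roleDefinitionGuid)
  }
}
```

Using `guid()` over the scope, principal, and role keeps the assignment name deterministic, so redeploying the module does not create duplicates.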

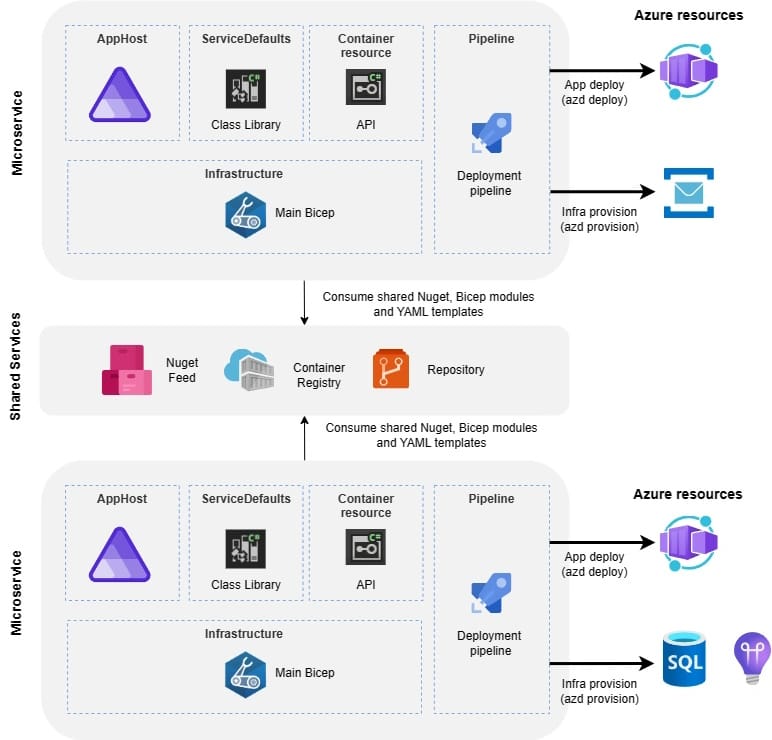

.NET Aspire solutions in the platform

The core idea is that each microservice solution is independent, and separate teams develop the code in their own source control repositories. The .NET Aspire application model is enabled in each microservice solution. In practice, this means that each solution has its own AppHost orchestration project, which is responsible for connecting and configuring the projects and services in the solution.

Shared Bicep modules

Each microservice has its own main.bicep file, which orchestrates the creation of its domain-specific Azure infrastructure. All microservice-specific resources except Container Apps are defined in main.bicep. Publishing the container images to Azure Container Registry and deploying them to the Azure Container Apps environment is handled automatically by the Azure Developer CLI.
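A minimal sketch of such a main.bicep skeleton, assuming the usual Azure Developer CLI conventions: subscription scope, with `environmentName` and `location` mapped to `${AZURE_ENV_NAME}` and `${AZURE_LOCATION}` in main.parameters.json. The resource group naming pattern is hypothetical. The `rg`, `resourceGroupName`, `location`, and `tags` symbols are the kind of values that module calls in the rest of main.bicep can refer to.

```bicep
// Sketch of a microservice main.bicep skeleton; names are illustrative.
targetScope = 'subscription'

@minLength(1)
@description('Name of the azd environment, e.g. dev or test.')
param environmentName string

@minLength(1)
@description('Azure region for the domain-specific resources.')
param location string

// Hypothetical naming convention for the microservice's own resource group.
var resourceGroupName = 'rg-domain-${environmentName}'

// azd uses the azd-env-name tag to locate the resources of an environment.
var tags = {
  'azd-env-name': environmentName
}

// The microservice's own resource group.
resource rg 'Microsoft.Resources/resourceGroups@2022-09-01' = {
  name: resourceGroupName
  location: location
  tags: tags
}

// Domain-specific resources (storage, databases, monitoring, ...) are added below
// as modules from the shared registry. Container Apps are not declared here;
// azd deploys them from their manifests.
```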

Microservices don't have their own Bicep modules (apart from main.bicep). All resources are created using shared, reusable Bicep modules from Azure Container Registry.
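A shared module is an ordinary Bicep file published to the registry, for example with `az bicep publish --file loganalyticsworkspace.bicep --target br:<registry>.azurecr.io/bicep/modules/loganalyticsworkspace:v0.1`, and consumers can shorten the registry reference with a module alias under `moduleAliases` in bicepconfig.json. The sketch below shows what a Log Analytics workspace module might contain; the parameters mirror a typical call site, while the SKU and the retention default are assumptions.

```bicep
// Hypothetical content of the shared module bicep/modules/loganalyticsworkspace.
@description('Name of the Log Analytics workspace.')
param name string

param location string = resourceGroup().location

param tags object = {}

@description('Data retention in days; 30 is an illustrative default.')
param retentionInDays int = 30

resource workspace 'Microsoft.OperationalInsights/workspaces@2022-10-01' = {
  name: name
  location: location
  tags: tags
  properties: {
    sku: {
      name: 'PerGB2018'
    }
    retentionInDays: retentionInDays
  }
}

output workspaceId string = workspace.id
output workspaceName string = workspace.name
```

Versioning the module with an explicit tag lets microservices upgrade on their own schedule instead of being forced onto every change.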

For example, creating a Log Analytics workspace:

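```bicep
// 'br/repo:' uses a module alias ("repo") from bicepconfig.json that points to the shared registry.
module logAnalyticsWorkspace 'br/repo:bicep/modules/loganalyticsworkspace:v0.1' = {
  name: logAnalyticsWorkspaceName
  scope: resourceGroup(resourceGroupName)
  // Make sure the microservice's resource group exists first.
  dependsOn: [
    rg
  ]
  params: {
    location: location
    tags: tags
    name: logAnalyticsWorkspaceName
  }
}
```

Shared Azure Container Registry to publish Container Apps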

As mentioned, the platform uses a shared Azure Container Apps environment, which is created by the shared infrastructure pipeline. main.bicep looks up this existing environment and exposes its resource ID as an output. The Azure Developer CLI stores the output as an environment variable, and the Container App manifest later reads the value from there.

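```bicep
// Location of the shared environment (for example, set in main.parameters.json).
param sharedRgName string
param containerAppEnvironmentName string

// Reference the shared environment created by the shared infrastructure pipeline.
resource containerAppEnvironment 'Microsoft.App/managedEnvironments@2023-08-01-preview' existing = {
  scope: resourceGroup(sharedRgName)
  name: containerAppEnvironmentName
}

// azd saves this output to .azure/<environment>/.env
output AZURE_CONTAINER_APPS_ENVIRONMENT_ID string = containerAppEnvironment.id
```

Manifest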

The Container App-specific manifest file references the Azure Container Apps environment ID. This setting determines which environment the container is deployed to, and `workloadProfileName` selects the workload profile it runs on.

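```yaml
location: {{ .Env.AZURE_LOCATION }}
properties:
  # Shared environment ID, read from the azd environment variables
  environmentId: {{ .Env.AZURE_CONTAINER_APPS_ENVIRONMENT_ID }}
  # Workload profile defined in the shared environment
  workloadProfileName: Dedicated
```

Shared YAML templates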

Shared YAML templates are published to the infrastructure repository, from which the microservice-specific deployment pipelines consume them. Using the Azure Developer CLI keeps the pipelines very compact.

Template for installing azd and setting up .NET 8 and .NET Aspire:

```yaml
steps:
  - task: Bash@3
    displayName: Install azd
    inputs:
      targetType: 'inline'
      script: |
        curl -fsSL https://aka.ms/install-azd.sh | bash -s -- --version daily

  - pwsh: |
      azd config set auth.useAzCliAuth "true"
    displayName: Configure AZD to Use AZ CLI Authentication.

  - task: UseDotNet@2
    displayName: "Setup .NET 8"
    inputs:
      version: "8.0.x"

  - pwsh: |
      dotnet workload install aspire
    displayName: Setup .NET Aspire
```

Infrastructure provision template:

```yaml
parameters:
  - name: environment
    type: string
    default: test
  - name: serviceConnection
    type: string

steps:
  # The Azure CLI task signs in with the service connection; azd reuses that login.
  - task: AzureCLI@2
    displayName: Provision Infrastructure
    inputs:
      azureSubscription: ${{ parameters.serviceConnection }}
      scriptType: pscore
      scriptLocation: inlineScript
      inlineScript: |
        azd provision --environment ${{ parameters.environment }} --no-prompt --no-state
```

Application deployment template:

```yaml
parameters:
  - name: environment
    type: string
    default: test
  - name: serviceConnection
    type: string

steps:
  # The Azure CLI task signs in with the service connection; azd reuses that login.
  - task: AzureCLI@2
    displayName: Deploy to ${{ parameters.environment }}
    inputs:
      azureSubscription: ${{ parameters.serviceConnection }}
      scriptType: pscore
      scriptLocation: inlineScript
      inlineScript: |
        azd deploy --environment ${{ parameters.environment }} --no-prompt
```

Microservice-specific solution structure

When everything is in place, the folder structure of a domain-specific solution looks something like this (the content under the src folder naturally varies):

```
├── .azdo
│   └── pipelines
│       └── publish.yaml
├── .azure
│   ├── dev
│   │   └── .env
│   └── config.json
├── infra
│   ├── main.bicep
│   └── main.parameters.json
├── src
│   ├── Domain.Api
│   ├── Domain.AppHost
│   └── Domain.ServiceDefaults
├── Domain.sln
└── azure.yaml
```

Considerations of this setup

Managed Identities for each Container App

You should create a user-assigned managed identity for each Container App so that you can easily control, for example, which identities can access the microservice-specific data storage.
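A minimal sketch of a per-app identity, assuming the domain data lives in a storage account that already exists in the microservice's resource group; the parameter names and the choice of the built-in Storage Blob Data Contributor role are illustrative assumptions.

```bicep
// Sketch: one user-assigned identity per Container App, with access to its own storage only.
param location string = resourceGroup().location
param identityName string
param storageAccountName string

resource identity 'Microsoft.ManagedIdentity/userAssignedIdentities@2023-01-31' = {
  name: identityName
  location: location
}

resource storage 'Microsoft.Storage/storageAccounts@2023-01-01' existing = {
  name: storageAccountName
}

// Built-in Storage Blob Data Contributor role.
var blobDataContributorRoleId = 'ba92f5b4-2d11-453d-a403-e96b0029c9fe'

// Scoped to this storage account only, not the whole resource group.
resource blobAccess 'Microsoft.Authorization/roleAssignments@2022-04-01' = {
  name: guid(storage.id, identity.id, blobDataContributorRoleId)
  scope: storage
  properties: {
    principalId: identity.properties.principalId
    principalType: 'ServicePrincipal'
    roleDefinitionId: subscriptionResourceId('Microsoft.Authorization/roleDefinitions', blobDataContributorRoleId)
  }
}

output identityResourceId string = identity.id
output identityClientId string = identity.properties.clientId
```

The identity's resource ID can then be attached to the Container App (under `identity.userAssignedIdentities` in the Container App definition), and the client ID passed to the application so that its Azure SDK credential picks the right identity.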

Virtual network

Azure Container Apps supports virtual network integration, which facilitates communication between containers and external services. In a multi-tenant model, you may need to implement network policies that restrict communication between the tenants' applications and domain-specific resources.

Log Analytics Workspace

The Azure Container Apps environment has its own Log Analytics workspace, where system logs and container console logs are persisted. Consider carefully where to locate this shared workspace: it should be somewhere all teams can access to follow their own Container Apps' logs. For application traces and other telemetry, the preferred approach is to create a workspace-based Application Insights resource in the microservice-specific resource group.
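A minimal sketch of a workspace-based Application Insights resource in a microservice's resource group, assuming a Log Analytics workspace with the given name already exists in that same resource group. The output name is an assumption, chosen so that the Azure Developer CLI stores the connection string as an environment variable the services can pick up.

```bicep
// Sketch: per-microservice Application Insights backed by a Log Analytics workspace.
param location string = resourceGroup().location
param appInsightsName string
param logAnalyticsWorkspaceName string

resource workspace 'Microsoft.OperationalInsights/workspaces@2022-10-01' existing = {
  name: logAnalyticsWorkspaceName
}

resource appInsights 'Microsoft.Insights/components@2020-02-02' = {
  name: appInsightsName
  location: location
  kind: 'web'
  properties: {
    Application_Type: 'web'
    // Workspace-based mode: telemetry is stored in the microservice's own workspace.
    WorkspaceResourceId: workspace.id
  }
}

output APPLICATIONINSIGHTS_CONNECTION_STRING string = appInsights.properties.ConnectionString
```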

Cost monitoring

If you want to monitor costs at the microservice (domain service) level, you also need to include costs from the shared infrastructure resource group when a Dedicated plan is used.

Resource Allocation

As mentioned earlier, Azure Container Apps lets you specify resource limits, such as CPU and memory, for each container. You can adjust these settings in the YAML manifest. In a multi-tenant environment, you need to allocate resources carefully so that one tenant's application doesn't consume excessive resources and degrade the performance of other tenants' applications.
