How to create an Event Catalog?

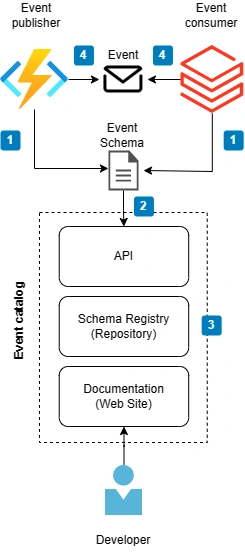

I recently worked on a project where an event-driven architecture was used to distribute different events to multiple consumers. During the project, we spent some time thinking about how to document events easily and how to create a centralized repository for event schemas. In this blog post, I share some thoughts about event catalogs.

What is event-driven architecture?

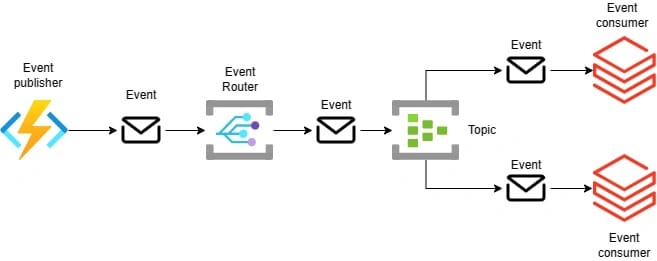

In an event-driven architecture, components communicate with each other through events. Events can be triggered when a significant change has happened in the application state. Event-driven architecture enables nearly real-time processing, decoupling of components, and component-specific scaling.
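To make the decoupling concrete, here is a minimal in-process sketch in Python that is not tied to any Azure service. Publishers emit named events to a bus, and each consumer subscribes only to the event types it cares about, without knowing who published them. The `EventBus` class and the `FeedbackCreated` payload are illustrative assumptions, not part of any library.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Minimal in-memory publish/subscribe bus (illustrative only)."""

    def __init__(self) -> None:
        # Maps an event type name to the handlers subscribed to it.
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        # The publisher does not know who consumes the event;
        # every subscribed handler receives the same payload.
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


if __name__ == "__main__":
    bus = EventBus()
    received: List[str] = []
    bus.subscribe("FeedbackCreated", lambda e: received.append(f"analytics:{e['id']}"))
    bus.subscribe("FeedbackCreated", lambda e: received.append(f"email:{e['id']}"))
    print(bus.publish("FeedbackCreated", {"id": "42"}), received)
```

Adding a third consumer only requires another `subscribe` call; the publisher code does not change, which is the decoupling the paragraph describes.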

About terminology

Open-source tool for the documentation site

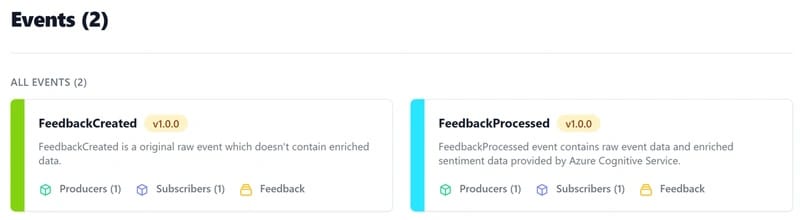

EventCatalog is a free, open-source documentation tool for event-driven architectures. It has a comprehensive set of built-in features for showing domain boundaries, event schemas, event samples, and dependencies. It also supports Markdown syntax and Mermaid diagrams, so you can enrich the event documentation as much as you want.

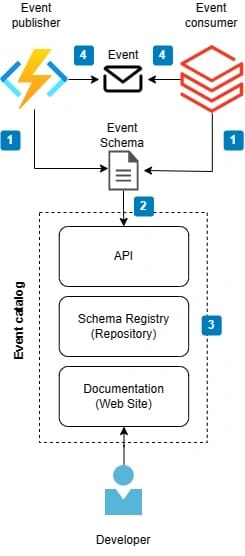

EventCatalog is an advanced event documentation tool, but it doesn't provide API capabilities for sharing event schemas with publishers and consumers. You need to build the API layer yourself.

You can find comprehensive instructions on how to install and configure EventCatalog here. In Azure, you can host the EventCatalog static website in, for example, Azure Static Web Apps, Blob Storage (static website hosting), or App Service.

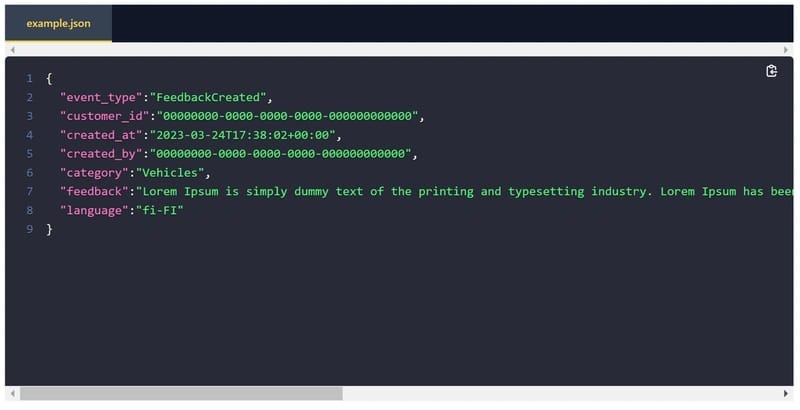

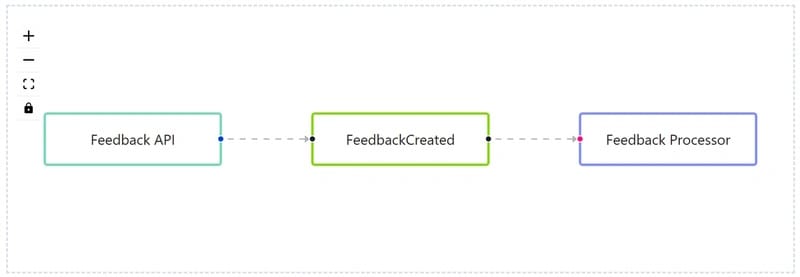

Some screenshots from EventCatalog

Events listing

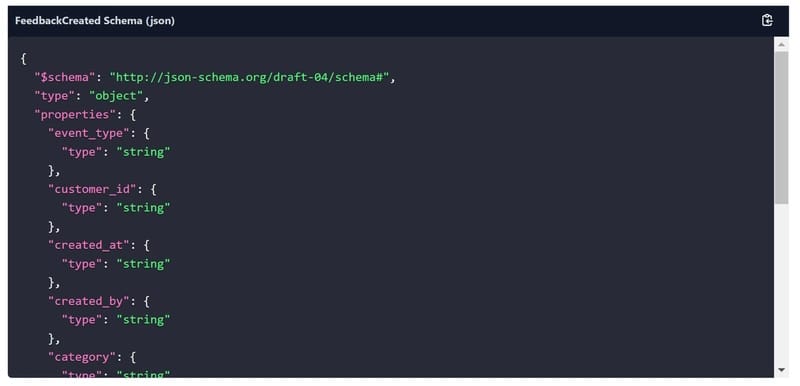

Event Schema viewer

Event sample viewer

Dependency visualizer

Thoughts about Event Catalog implementation in our project

Requirements

- One centralized repository for event schemas is required

- API or Client SDK is required to enable event publishers and consumers to fetch event schemas programmatically

- Documentation site that can present event schemas, samples, and diagrams of event publishers and consumers

Event documentation tool

The EventCatalog documentation tool provided so many great features and matched our needs so well that it was clear we would use it. We planned to host the EventCatalog site in Azure Blob Storage (static website hosting). The only thing missing was the capability to share event schemas via an API, so we needed to consider other ways to fulfill this requirement.

Next, I'll present some concepts that we considered for implementation during the discovery work.

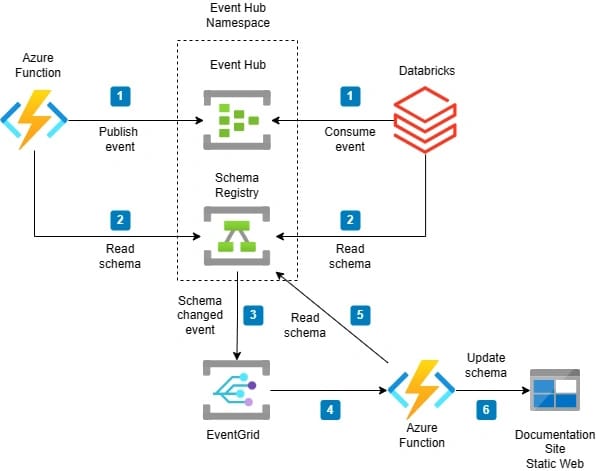

First idea

Event Hubs is a fundamental part of the event-driven architecture in our project. During the project, we noticed that a schema registry (repository) is already part of Event Hubs. This was great, because we only needed to solve how to update schemas in EventCatalog (the documentation site) automatically whenever a schema changed in the Event Hubs schema registry (the master data source).

Note that the schema registry is available only in the Standard or higher pricing tiers of Event Hubs; the Basic tier does not include it.

EventCatalog uses the folder structure below, so schema files should be fairly easy to write into it.

```
├── events
│   └── FeedbackCreated
│       ├── versioned
│       │   └── 0.0.1
│       │       ├── Examples
│       │       │   └── example.json
│       │       ├── index.md
│       │       └── schema.json
│       ├── index.md
│       └── schema.json
```

Event Hubs has a great client SDK/API for the schema registry, which solves the requirement of fetching schemas programmatically. You can find samples of how to use it here.
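As a sketch of how a schema could be written into this layout, the Python function below writes `schema.json` (and optionally `Examples/example.json`) for a given event name and version under an `events/` folder. It assumes the layout shown above: the current schema at the event's root folder and specific versions under `versioned/<version>/`. The function name and parameters are hypothetical, and the sketch does not generate the `index.md` files that EventCatalog also needs.

```python
import json
from pathlib import Path
from typing import Any, Dict, Optional


def write_event_schema(
    catalog_root: Path,
    event_name: str,
    schema: Dict[str, Any],
    version: Optional[str] = None,
    example: Optional[Dict[str, Any]] = None,
) -> Path:
    """Write schema.json (and optionally an example) into the EventCatalog layout.

    Hypothetical helper: with version=None the files go to the event's root
    folder (the current version); otherwise to versioned/<version>/.
    Returns the folder that was written.
    """
    event_dir = catalog_root / "events" / event_name
    target = event_dir if version is None else event_dir / "versioned" / version
    target.mkdir(parents=True, exist_ok=True)

    # Pretty-print so diffs in source control stay readable.
    (target / "schema.json").write_text(json.dumps(schema, indent=2) + "\n", encoding="utf-8")

    if example is not None:
        examples_dir = target / "Examples"
        examples_dir.mkdir(exist_ok=True)
        (examples_dir / "example.json").write_text(
            json.dumps(example, indent=2) + "\n", encoding="utf-8"
        )
    return target
```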

My first thought was that perhaps the Event Hubs schema registry supports Event Grid for distributing events, e.g., when a schema is updated. An Azure Function could then subscribe to those events, fetch the new schema from the schema registry via the client SDK, and write it into the EventCatalog site's folder structure. Unfortunately, Microsoft.EventHub.CaptureFileCreated, which is raised by the Event Hubs Capture feature when a capture file is created, was the only supported event type.

So this wasn't a feasible solution.

Second iteration

In the second iteration, the main goal was still to use the Event Hubs schema registry as the master source for schemas, but the schemas were copied to EventCatalog (the documentation site) periodically by an Azure Function. The function was responsible for fetching the schema data from the schema registry via the client SDK and then writing the schemas to the Blob Storage account where EventCatalog is hosted.
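The core of such a periodic sync can be sketched independently of Azure. The Python function below takes a list of schema names (they have to be known up front, because the client cannot list all schemas), a `fetch_schema` callable that you would back with the Schema Registry client SDK, and a `write_schema` callable that would write into the EventCatalog folder in Blob Storage. In a real deployment this would run inside, for example, a timer-triggered Azure Function. Both callables and the content-hash comparison used to skip unchanged schemas are assumptions for illustration, not part of any Azure SDK.

```python
import hashlib
import json
from typing import Any, Callable, Dict, Iterable, List

Schema = Dict[str, Any]


def _fingerprint(schema: Schema) -> str:
    # Canonical JSON (sorted keys) so key order does not count as a change.
    canonical = json.dumps(schema, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def sync_schemas(
    schema_names: Iterable[str],
    fetch_schema: Callable[[str], Schema],
    write_schema: Callable[[str, Schema], None],
    known_fingerprints: Dict[str, str],
) -> List[str]:
    """Copy changed schemas from the registry to the documentation site.

    fetch_schema: hypothetical wrapper around the Schema Registry client.
    write_schema: hypothetical writer into the EventCatalog Blob Storage folder.
    known_fingerprints: hashes from the previous run, updated in place.
    Returns the names of schemas that were written.
    """
    updated: List[str] = []
    for name in schema_names:
        schema = fetch_schema(name)
        fingerprint = _fingerprint(schema)
        if known_fingerprints.get(name) == fingerprint:
            continue  # unchanged since the last run, skip the write
        write_schema(name, schema)
        known_fingerprints[name] = fingerprint
        updated.append(name)
    return updated
```

Keeping `known_fingerprints` between runs (for example in a small blob) is what lets each timer run write only the schemas that actually changed.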

Pros

- Schema Registry is a built-in feature of Event Hubs, so no custom development is required for the registry itself

- No need to implement a separate schema registry API, because the Event Hubs client SDK/API provides programmatic access to schemas

- The schema reader/updater could potentially generate event samples automatically while updating schemas
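To illustrate the last point, here is a minimal Python sketch that derives a placeholder sample event from a JSON Schema by walking its `properties` and emitting a default value per `type`. It prefers `examples` or `enum` values when the schema declares them. It handles only `object`, `array`, and the primitive types, ignores keywords such as `$ref`, `oneOf`, and `format`, and would not work on Avro schemas as-is; the placeholder values themselves are arbitrary assumptions.

```python
from typing import Any, Dict

# Arbitrary placeholder values per JSON Schema primitive type.
_PLACEHOLDERS: Dict[str, Any] = {
    "string": "string",
    "integer": 0,
    "number": 0.0,
    "boolean": False,
    "null": None,
}


def sample_from_schema(schema: Dict[str, Any]) -> Any:
    """Build a placeholder instance for a (simple) JSON Schema."""
    if schema.get("examples"):
        return schema["examples"][0]  # prefer an example declared in the schema
    if "enum" in schema:
        return schema["enum"][0]
    schema_type = schema.get("type", "object")
    if isinstance(schema_type, list):
        schema_type = schema_type[0]  # e.g. ["string", "null"] -> "string"
    if schema_type == "object":
        return {
            name: sample_from_schema(prop)
            for name, prop in schema.get("properties", {}).items()
        }
    if schema_type == "array":
        return [sample_from_schema(schema.get("items", {}))]
    return _PLACEHOLDERS.get(schema_type)
```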

Cons

- The Schema Registry client in the Azure.Data.SchemaRegistry NuGet package currently doesn't support fetching all schemas at once. You need to know the name of a schema to retrieve it. This is not a show-stopper, but it requires some extra work.

- Schema Registry supports the Avro schema format, but JSON Schema support is still in preview.

- If you don't otherwise use Event Hubs in your architecture, you need to provision it separately just to get access to Schema Registry, which adds a small monthly cost.

- The schema reader/updater Azure Function needs logic to determine which publisher and consumer services use each event. In EventCatalog, this is defined in the event-specific index.md file.

This is a possible solution, but let's iterate on it a bit more.

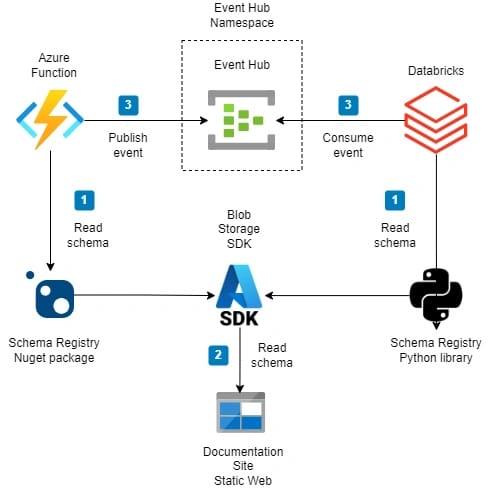

Third iteration

As the previous iteration showed, combining the Event Hubs schema registry with the open-source documentation site EventCatalog was a bit too complex, so we decided to iterate further.

In this approach, the Event Hubs schema registry is removed, and EventCatalog in Blob Storage becomes the master data source for event schema data. This lets us use the Blob Storage client SDK/API to fetch schema data from Blob Storage. To make fetching schemas as easy as possible for publishers and consumers, a custom library should be developed. Our publishers and consumers were different kinds of systems, so this would require extra work.
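As a sketch of what such a client library might look like, assume the CI/CD pipeline uploads the `events/` folder to Blob Storage with the same layout as in source control, and that the container is readable over HTTPS (for example via the static website endpoint or a SAS URL). The `SchemaReader` class, its method names, and the URL layout are hypothetical. It uses only the Python standard library, so the same idea could be ported to the other kinds of publisher and consumer systems; the Azure Blob Storage SDK would be the alternative for authenticated access.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Callable, Dict, Optional, Tuple


def schema_blob_path(event_name: str, version: Optional[str] = None) -> str:
    """Path of a schema inside the assumed EventCatalog folder layout."""
    if version is None:
        return f"events/{event_name}/schema.json"  # current version
    return f"events/{event_name}/versioned/{version}/schema.json"


def _http_get(url: str) -> bytes:
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read()


class SchemaReader:
    """Hypothetical client that fetches event schemas from Blob Storage."""

    def __init__(self, base_url: str, get: Callable[[str], bytes] = _http_get) -> None:
        self._base_url = base_url.rstrip("/")
        self._get = get  # injectable for testing or for authenticated clients
        self._cache: Dict[Tuple[str, Optional[str]], Dict[str, Any]] = {}

    def get_schema(self, event_name: str, version: Optional[str] = None) -> Dict[str, Any]:
        key = (event_name, version)
        if key not in self._cache:
            path = urllib.parse.quote(schema_blob_path(event_name, version))
            self._cache[key] = json.loads(self._get(f"{self._base_url}/{path}"))
        return self._cache[key]
```

Caching versioned schemas indefinitely is safe only if published versions are never modified; the unversioned (current) schema would need a time-to-live in practice, which this sketch omits.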

This approach lets you maintain event schemas and event samples in EventCatalog's source control, while the CI/CD pipeline deploys everything to Blob Storage.
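A deployment step for this could walk the local `events/` folder and upload each file under the same relative path, so that the folder layout is preserved in Blob Storage. The sketch below only computes the blob names and content types; the actual upload (for example with the Azure Storage SDK or `az storage blob upload-batch`) is left out. The function name and the content-type table are assumptions.

```python
import mimetypes
from pathlib import Path
from typing import List, Tuple

# Explicit types for the file kinds used in the catalog; fall back to mimetypes.
_CONTENT_TYPES = {".json": "application/json", ".md": "text/markdown"}


def plan_uploads(catalog_root: Path) -> List[Tuple[str, str, str]]:
    """Return (local_path, blob_name, content_type) for every file under events/."""
    events_dir = catalog_root / "events"
    plan: List[Tuple[str, str, str]] = []
    for path in sorted(p for p in events_dir.rglob("*") if p.is_file()):
        # Blob names always use forward slashes, regardless of the OS.
        blob_name = path.relative_to(catalog_root).as_posix()
        content_type = _CONTENT_TYPES.get(path.suffix.lower()) or (
            mimetypes.guess_type(path.name)[0] or "application/octet-stream"
        )
        plan.append((str(path), blob_name, content_type))
    return plan
```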

Sample event-specific index.md file

```yaml
---
name: FeedbackProcessed
version: 1.0.0
summary: |
  FeedbackProcessed event contains raw event data and enriched sentiment data provided by Azure Cognitive Service.
producers:
  - Feedback Processor
consumers:
  - Feedback Subscriber
---
```

Pros

- You can maintain event schemas and event samples in the source control of EventCatalog

- Simpler architecture: there is no need to replicate event schemas from another place, because everything is in one place (Blob Storage)

- You don't need Event Hubs if it's not otherwise used in your architecture

Cons

- Requires more development effort

- Different types of publisher and consumer systems require their own schema reader libraries, which increases maintenance

Summary

It was pretty difficult to fully automate the integration between the Event Hubs schema registry and the EventCatalog documentation site. Of these options, I would choose the solution presented in the third iteration, where the schema registry (repository) is in Blob Storage, because it is simpler. If you don't need advanced event documentation, the Event Hubs schema registry is a good option for you.
