How to stream all Home Assistant events to Azure?

I have been using and testing Home Assistant as the center of my home automation for a while now. So far, I have integrated some motion sensors, temperature sensors, and lights into my home automation hub. I'm still in the early phase with Home Assistant and keen to learn and automate more.

This blog post is about streaming events from the Home Assistant Event Bus to the Azure cloud. Home Assistant has good capabilities for creating dashboards and charts from the data, but I wanted to explore the data with Azure tools and services. In this sample, I'll create some dashboards for temperature sensor data (RuuviTag).

Azure has many capabilities for data analytics, and this time I wanted to learn more about Azure Data Explorer and how to use it in this case. Microsoft offers a free Azure Data Explorer cluster, which is perfect for my testing and learning.

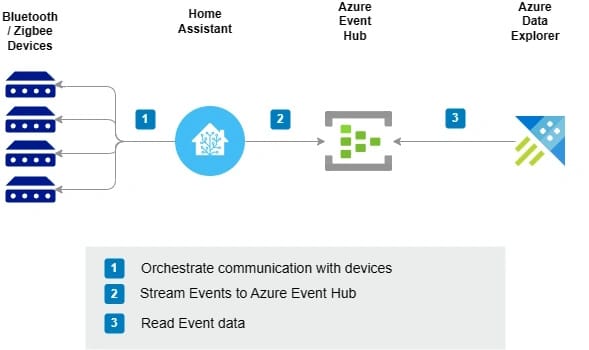

Solution overview

The heart of the system is Home Assistant, which runs on the Proxmox virtualization platform at my home. Wikipedia defines Home Assistant as follows:

Home Assistant is free and open-source software for home automation designed to be a central control system for smart home devices with a focus on local control and privacy.

Home Assistant supports a large number of integrations and receives data from devices over different protocols (Wi-Fi, Bluetooth, Zigbee, etc.). In this case, temperature data is transmitted to Home Assistant from RuuviTag sensors via Bluetooth. RuuviTag is a wireless Bluetooth sensor node that measures temperature, air humidity, air pressure, and movement.

Azure Event Hubs is a big data streaming platform and event ingestion service that sits between Home Assistant and Azure Data Explorer in this case. The Azure Event Hub integration in Home Assistant hooks into the Home Assistant Event Bus and forwards events to Azure Event Hub.

Lastly, data analytics and visualization services are required to finalize the solution. Azure Data Explorer is a fully managed, high-performance, big data analytics platform that makes it easy to analyze high volumes of data in near real-time. The Azure Data Explorer toolbox gives you an end-to-end solution for data ingestion, query, visualization, and management.

Let's get started

Step 1: Configure Azure Infrastructure for Event Ingestion

Create Azure Event Hub Namespace

This Azure CLI command creates an Azure Event Hub Namespace which is a container for Event Hub instances.

az eventhubs namespace create --name "evhomeautomation" --resource-group "rg-homeautomation" -l "westeurope" --sku "Basic"

Create Azure Event Hub

I'm using the Basic pricing tier and therefore message retention must be configured to 1 day.

az eventhubs eventhub create --name "homeassistant" --resource-group "rg-homeautomation" --namespace-name "evhomeautomation" --message-retention 1

Create SAS policy for Azure Event Hub

The SAS policy will be used by Home Assistant. The Send right is enough because Home Assistant doesn't need to read from or manage the Event Hub instance.

az eventhubs eventhub authorization-rule create --name "homeassistant" --eventhub-name "homeassistant" --namespace-name "evhomeautomation" --resource-group "rg-homeautomation" --rights "Send"

Step 2: Configure RuuviTag integration to Home Assistant

Open Home Assistant Admin Portal > Select Settings > Devices & Services > Add Integration

Search with the keyword "Ruuvitag"

Now the integration is ready to receive sensor data from RuuviTag. Besides temperature, RuuviTag sensors also provide air humidity, air pressure, and movement data. These instructions assume that the machine running Home Assistant has a Bluetooth adapter that is already configured.

Step 3: Configure Azure Event Hubs integration to Home Assistant

Open Home Assistant Admin Portal > Select Settings > Devices & Services > Add Integration

Search with the keyword "Microsoft"

Select "Azure Event Hub"

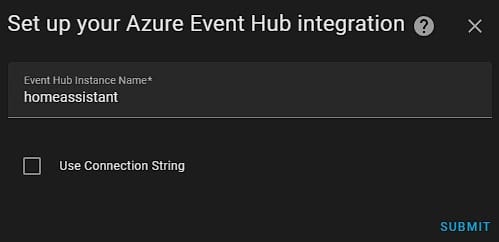

Enter the Event Hub instance name. I first made the mistake of entering the Event Hub namespace name here, which is wrong. This field needs the name of the Event Hub instance.

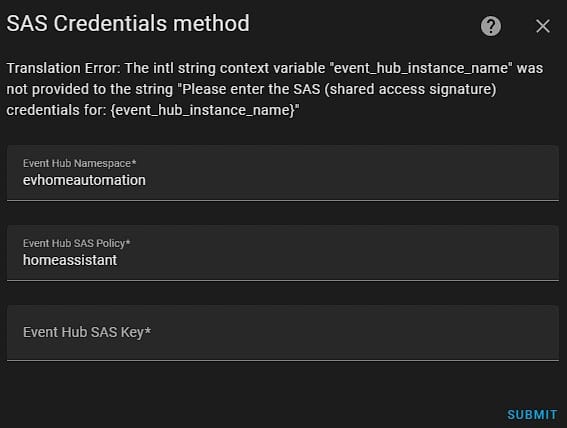

Next, enter the Event Hub namespace name, the SAS policy name, and the SAS key.
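To make the roles of these four values (namespace, instance, policy name, key) more concrete, here is a minimal Python sketch. It assembles them into the usual Event Hubs connection string layout and derives a SAS token in the format Azure documents for Event Hubs. The function names and the `"<your-sas-key>"` placeholder are my own illustration, and I'm not claiming this is what the Home Assistant integration does internally.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse


def build_connection_string(namespace, hub_instance, policy_name, policy_key):
    """Assemble an Event Hubs connection string from its four parts.

    The namespace goes into the endpoint host name, while the Event Hub
    instance is the EntityPath. Mixing these two up is the mistake
    described above.
    """
    return (
        f"Endpoint=sb://{namespace}.servicebus.windows.net/;"
        f"SharedAccessKeyName={policy_name};"
        f"SharedAccessKey={policy_key};"
        f"EntityPath={hub_instance}"
    )


def generate_sas_token(resource_uri, policy_name, policy_key,
                       ttl_seconds=3600, now=None):
    """Derive a time-limited SAS token from a policy name and key.

    `now` is a hypothetical hook for deterministic testing; by default
    the current time is used.
    """
    expiry = int((time.time() if now is None else now) + ttl_seconds)
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # The signed string is the URL-encoded resource URI, a newline, and the expiry.
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(policy_key.encode("utf-8"), string_to_sign,
                      hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (
        f"SharedAccessSignature sr={encoded_uri}"
        f"&sig={signature}&se={expiry}&skn={policy_name}"
    )


if __name__ == "__main__":
    # Names match the CLI commands in Step 1; the key is a placeholder.
    print(build_connection_string(
        "evhomeautomation", "homeassistant", "homeassistant", "<your-sas-key>"))
```

The token only carries the rights of the policy that signed it, so a token derived from the "Send" policy created in Step 1 can't be used to read or manage the Event Hub.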

After a while, Home Assistant starts sending events to Azure Event Hub. Note! By default, this integration sends ALL events from the Home Assistant Event Bus to Azure. If you want to limit which events are sent to Azure, you need to add a filter for the integration in Home Assistant's YAML configuration (configuration.yaml).
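As a rough sketch of what such a filter could look like, the fragment below limits the stream to RuuviTag sensor entities. The `filter` keys are the ones I recall from the Home Assistant Azure Event Hub integration documentation. The glob pattern is my own assumption based on the entity naming seen later in this post, and the excluded entity ID is purely hypothetical, so adjust both to match your entity IDs.

```yaml
# configuration.yaml (fragment)
# Only forward entities matching the include rules to Azure Event Hub.
azure_event_hub:
  filter:
    include_entity_globs:
      - sensor.ruuvitag_*          # assumed naming, e.g. sensor.ruuvitag_64f5_temperature
    exclude_entities:
      - sensor.ruuvitag_64f5_movement_counter   # hypothetical entity, shown only as an example
```

Home Assistant needs a restart after changing configuration.yaml for the filter to take effect.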

Step 4: Configure Azure Infrastructure for Event Analysis

As mentioned, Microsoft offers a free Azure Data Explorer cluster. It has some quotas that you should be aware of. There are also some feature differences between full and free clusters. Read more about those differences here.

Free Cluster quotas

| Item | Value |
| --- | --- |
| Storage (uncompressed) | ~100 GB |
| Databases | Up to 10 |
| Tables per database | Up to 100 |
| Columns per table | Up to 200 |
| Materialized views per database | Up to 5 |

How to create a Free Cluster?

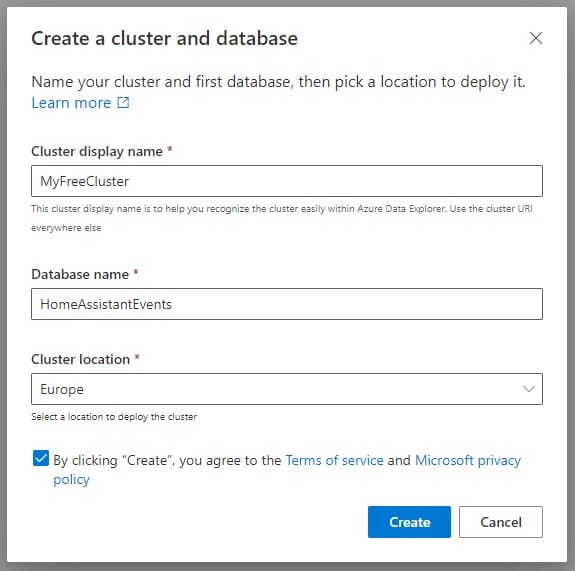

Start the free cluster creation here. First, you need to give names to the cluster and the database.

Next, we'll configure data ingestion from Azure Event Hub.

Data ingestion wizard

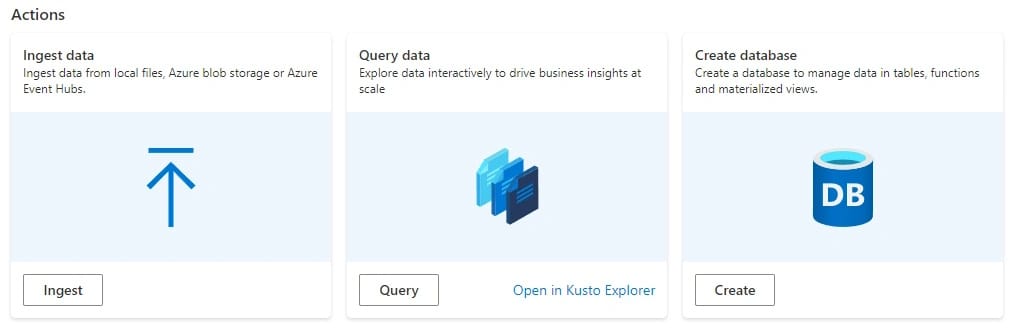

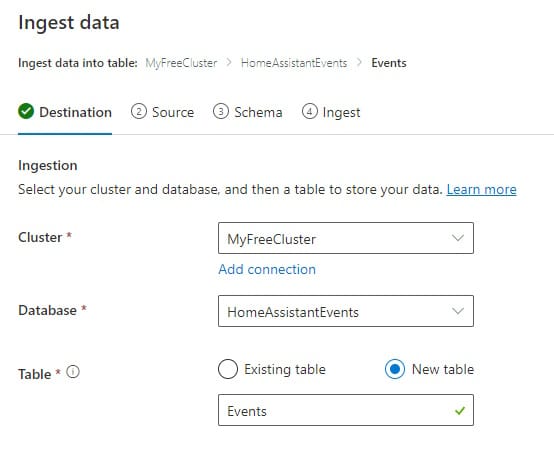

Once the cluster is created, select "Ingest data" from the front page.

Choose the database and decide on a name for the table where the data will be ingested.

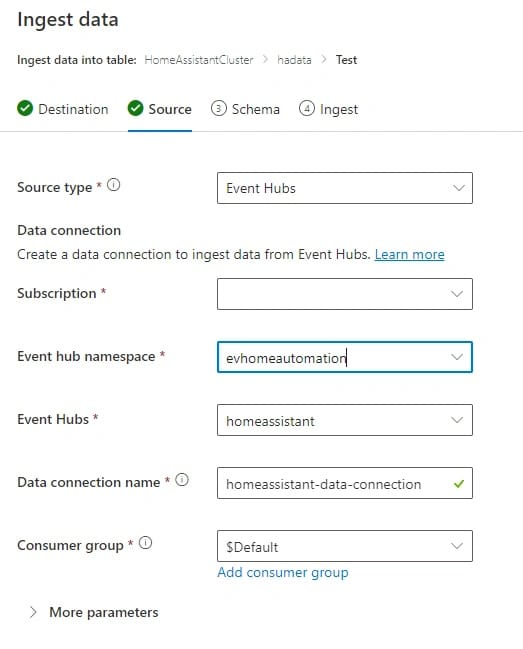

On the Source page, you need to provide Azure Event Hubs connection details.

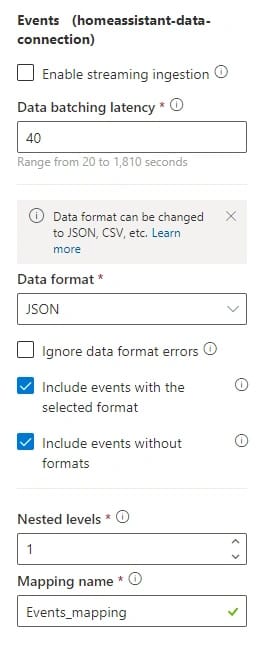

In the next phase, you choose the data format. I selected JSON.

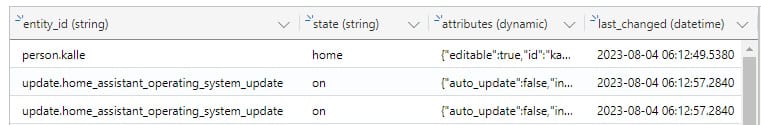

If everything worked as expected, the preview should show Home Assistant events streamed from Azure Event Hub.

About the Home Assistant event data

| Field | Example value | Description |
| --- | --- | --- |
| entity_id | sensor.ruuvitag_64f5_temperature | Identifier of the entity that has changed. |
| state | 22.02 | State of the entity |
| attributes | {"state_class": "measurement", "unit_of_measurement": "°C", "device_class": "temperature", "friendly_name": "RuuviTag 64F5 Temperature"} | Entity-specific attributes |
| last_changed | 2023-07-24T06:50:31.581296Z | Changed timestamp |
| last_updated | 2023-07-24T06:50:31.581296Z | Updated timestamp |
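To get a feel for the shape of this data before writing any queries, here is a small Python sketch that parses one event into typed fields. The sample payload is put together from the example values in the table above. I'm assuming the state arrives as a string (with non-numeric values such as "unavailable" possible), and the `Reading` class and `parse_event` function are hypothetical names used only for illustration.

```python
import json
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Sample event assembled from the example values in the table above.
SAMPLE_EVENT = """
{
  "entity_id": "sensor.ruuvitag_64f5_temperature",
  "state": "22.02",
  "attributes": {
    "state_class": "measurement",
    "unit_of_measurement": "°C",
    "device_class": "temperature",
    "friendly_name": "RuuviTag 64F5 Temperature"
  },
  "last_changed": "2023-07-24T06:50:31.581296Z",
  "last_updated": "2023-07-24T06:50:31.581296Z"
}
"""


@dataclass
class Reading:
    entity_id: str
    value: Optional[float]   # None when the state is not numeric
    unit: Optional[str]
    last_updated: datetime


def parse_event(raw: str) -> Reading:
    """Turn one JSON event into a typed reading."""
    event = json.loads(raw)
    try:
        value = float(event["state"])
    except (TypeError, ValueError):
        value = None  # assumed non-numeric states, e.g. "unavailable"
    # Replace the trailing "Z" so fromisoformat() accepts it on older Python versions.
    timestamp = datetime.fromisoformat(event["last_updated"].replace("Z", "+00:00"))
    return Reading(
        entity_id=event["entity_id"],
        value=value,
        unit=event.get("attributes", {}).get("unit_of_measurement"),
        last_updated=timestamp,
    )


if __name__ == "__main__":
    print(parse_event(SAMPLE_EVENT))
```

The same three steps (numeric conversion of state, pulling the unit out of attributes, and parsing the timestamp) are what any later query or dashboard has to do with these events.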

Next steps

Home Assistant event data is now ingested into Azure for later analysis and visualization. In the next blog post, I'll go into more detail about Azure Data Explorer, how to query the data using KQL, and how to create dashboards presenting the temperature data.
